February 17, 2021

Sumitomo Mitsui Financial Group, Inc.

The Japan Research Institute, Limited

JSOL Corporation

Allganize, Inc.

Practical Application of a Unique Natural Language Processing AI Discovered Through the Silicon Valley Digital Innovation Lab

TOKYO, February 17, 2021 --- Sumitomo Mitsui Financial Group, Inc. (President and Group CEO: Jun Ohta; the group is collectively referred to as the “SMBC Group”) will start using an AI system (hereinafter the “System”) that incorporates advanced natural language processing technology*1 in the first half of 2021. The System was developed jointly by SMBC Group, The Japan Research Institute, Limited (President and CEO: Katsunori Tanizaki), JSOL Corporation (President: Masatoshi Maekawa) and Allganize, Inc. (Founder and CEO: Changsu Lee; hereinafter “Allganize”).

The System will first be used by the call centers of SMBC Nikko Securities Inc. (President: Yuichiro Kondo) and Sumitomo Mitsui Card Co., Ltd. (President: Yukihiko Onishi) to help operators respond to customer inquiries more quickly and accurately.

SMBC Group has already been using AI systems to improve operational efficiency and has been considering new solutions to enhance efficiency further. In this context, the Silicon Valley Digital Innovation Lab, which had previously been set up to survey advanced technologies on the West Coast of the United States, became aware of a unique natural language processing technology developed by U.S. startup Allganize, Inc.

BERT*2 is the de facto standard for advanced natural language processing and has attracted a great deal of attention for its high accuracy. However, training BERT is computationally intensive and expensive because of the model's complexity and the large amount of training data that must be processed to achieve high accuracy.

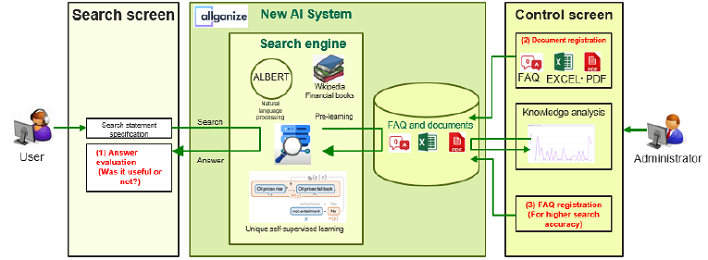

The natural language processing technology that Allganize developed for the System combines a proprietary BERT-based language model, pre-trained with the ALBERT*3 method, with Allganize's own self-supervised learning*4 technology specialized for question answering. This combination reduces the amount of data and time required for training, as well as the cost of specialized hardware. A proof of concept conducted by SMBC Group confirmed that the training workload was significantly lower than with a conventional system. SMBC Group’s practical application of this natural language processing technology will be the first such use case among Japanese financial groups.

In addition to supporting call center operators, we plan to expand the use of the System to services across the entire SMBC Group. Going forward, SMBC Group companies will continue working together to actively adopt cutting-edge technology and improve operational efficiency.

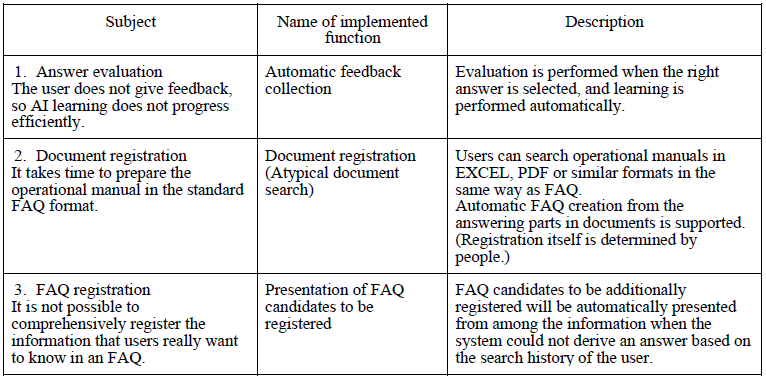

(Reference) The System has revolutionary functions that resolve the following issues in training an inquiry-response system: 1) answer evaluation, 2) document registration, and 3) FAQ registration.

*1. Natural language processing

A general term for technologies that let a computer take in natural language (the language humans use in everyday communication) and process that information for a given purpose. It has a wide range of applications, such as Japanese text input, search engines, machine translation, and AI speakers.

*2. BERT

Abbreviation for Bidirectional Encoder Representations from Transformers. It was first described in a paper by Jacob Devlin et al. of Google in October 2018. This AI technology is a type of “language model,” which estimates how natural a sentence is. It makes this estimate by analyzing word-to-word and sentence-to-sentence relationships, such as which words are likely to follow which other words. BERT has also attracted a great deal of attention because it can be applied to a variety of tasks, such as answering inquiries, searching documents, and summarizing documents. This versatility comes from pre-training, in which the model learns general-purpose features of words and sentences that can be applied universally.

*3. ALBERT

Abbreviation for A Lite BERT. A lightweight version of BERT, described in a paper released on arXiv on October 23, 2019 (ver. 2).

*4. Self-supervised learning

A technology that automatically learns the characteristics of data simply from the data itself. Because the tendencies in users' evaluations can be learned from the input data without explicit evaluations, there is no need to manually judge whether each data item is correct before it is entered.
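To make the idea of self-supervision concrete, the following is a minimal Python sketch of one generic technique, masked-word prediction as used in BERT-style pre-training: the training targets are carved out of unlabeled text itself, so no one has to label anything by hand. It is an illustration only and is not Allganize's proprietary method. The function name `make_masked_examples`, the naive whitespace tokenizer, and the `[MASK]` token string are assumptions chosen for simplicity; the default 15% masking rate mirrors the rate reported for BERT, although BERT also sometimes keeps or randomly replaces selected words, which this sketch omits.

```python
import random

MASK = "[MASK]"  # placeholder token (assumed name, for illustration only)


def make_masked_examples(sentence, mask_prob=0.15, seed=0):
    """Turn one unlabeled sentence into a self-supervised training example.

    Returns (masked_tokens, targets), where targets maps each masked
    position to the original word a model would be trained to predict.
    The "labels" come from the data itself, so no manual annotation is needed.
    """
    rng = random.Random(seed)   # seeded so the example is reproducible
    tokens = sentence.split()   # naive whitespace tokenizer (assumption)
    masked = []
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok    # the hidden word becomes the training label
        else:
            masked.append(tok)
    # Guarantee at least one prediction target for non-empty input.
    if tokens and not targets:
        i = rng.randrange(len(tokens))
        targets[i] = tokens[i]
        masked[i] = MASK
    return masked, targets


if __name__ == "__main__":
    masked, targets = make_masked_examples("the card limit can be raised online")
    print(masked)
    print(targets)
```

For example, `make_masked_examples("the card limit can be raised online")` returns the token list with some words replaced by `[MASK]`, plus a dictionary mapping each masked position to the original word. A model trained to fill in those blanks learns word relationships from raw text alone, which is the sense in which the data "supervises itself."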